Auditing file deletion and access, and logging the events to a file with PowerShell

I think many of you have run into this: someone comes to you and asks, "A file has disappeared from the shared folder. It was there and now it isn't; it looks like someone deleted it. Can you find out who did it?" At best you say you have no time; at worst you try to find a mention of the file in the logs. And with file auditing enabled on a file server, the logs are, to put it mildly, "rather large", and finding anything in them is unrealistic.

So after yet another such question (fortunately, backups are made several times a day) and my answer, "I don't know who did it, but I'll restore the file for you", I decided that this fundamentally did not satisfy me...

Let's get started.

First, enable auditing of file and folder access in Group Policy:

Local Security Policy -> Advanced Audit Policy Configuration -> Object Access

Enable "Audit File System" for both Success and Failure.
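The same setting can probably also be applied from an elevated PowerShell prompt with the built-in auditpol utility. A minimal sketch, assuming no domain GPO overwrites the local audit policy; on non-English Windows the subcategory name is localized.

```powershell
# Enable success and failure auditing for the "File System" subcategory (run elevated)
auditpol /set /subcategory:"File System" /success:enable /failure:enable

# Show the resulting setting
auditpol /get /subcategory:"File System"
```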

After that, configure auditing on the folder itself.

Open the properties of the shared folder on the file server, go to the "Security" tab, click "Advanced", switch to the "Auditing" tab, then click "Edit" and "Add". Select the principal whose access will be audited. I recommend choosing "Everyone"; otherwise the audit is pointless. Set "Apply to" to "This folder, subfolders and files".

Choose the actions to audit. I chose "Create files / write data", "Create folders / append data", "Delete subfolders and files" and "Delete", all for both Success and Failure.

Click OK and wait for the audit settings to be applied to all files. From then on, the Security event log fills up with file and folder access events. Their number is directly proportional to the number of users working with the share and, of course, to how actively they use it.
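The auditing entry configured in the GUI steps above can also be scripted. A sketch under stated assumptions: the folder path is hypothetical, the session is elevated (editing a SACL needs the "Manage auditing and security log" privilege), and the GUI checkboxes are mapped to the .NET FileSystemRights flags CreateFiles, AppendData, DeleteSubdirectoriesAndFiles and Delete.

```powershell
# Hypothetical path of the shared folder to audit
$folder = 'D:\Shares\Public'

# S-1-1-0 is the well-known SID of "Everyone" (avoids localized group names)
$everyone = New-Object System.Security.Principal.SecurityIdentifier('S-1-1-0')

# Create files / write data, Create folders / append data,
# Delete subfolders and files, Delete
$rights = [System.Security.AccessControl.FileSystemRights]'CreateFiles, AppendData, DeleteSubdirectoriesAndFiles, Delete'

# "This folder, subfolders and files"
$inherit   = [System.Security.AccessControl.InheritanceFlags]'ContainerInherit, ObjectInherit'
$propagate = [System.Security.AccessControl.PropagationFlags]::None

# Audit both successful and failed attempts
$auditFlags = [System.Security.AccessControl.AuditFlags]'Success, Failure'

$rule = New-Object System.Security.AccessControl.FileSystemAuditRule($everyone, $rights, $inherit, $propagate, $auditFlags)

# Read the current SACL, add the rule and write it back
$acl = Get-Acl -Path $folder -Audit
$acl.AddAuditRule($rule)
Set-Acl -Path $folder -AclObject $acl
```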

So the data is already in the logs; all that remains is to extract it, and only the part we care about, without the extra noise. Then we carefully write the data line by line into a text file, separating the fields with tabs, so that it can later be opened in, for example, a spreadsheet editor.

```powershell
# Period to examine on each run: the last hour, so the script
# should be scheduled to run once an hour.
$time = (Get-Date) - (New-TimeSpan -Minutes 60)

# $BodyL accumulates the lines that will be written to the log file
$BodyL = ""

# $Body: every 4663 event of the period, minus temporary and lock files,
# reduced to the time, the full file path and the user name
$Body = Get-WinEvent -FilterHashtable @{LogName="Security"; ID=4663; StartTime=$time} -ErrorAction SilentlyContinue |
    Where-Object {
        ([xml]$_.ToXml()).Event.EventData.Data |
            Where-Object { $_.Name -eq "ObjectName" } |
            Where-Object { $_.'#text' -notmatch '\.tmp$' } |
            Where-Object { $_.'#text' -notmatch '~lock' } |
            Where-Object { $_.'#text' -notmatch '~\$' }
    } |
    Select-Object TimeCreated,
        @{n="File"; e={([xml]$_.ToXml()).Event.EventData.Data | Where-Object { $_.Name -eq "ObjectName" } | ForEach-Object { $_.'#text' }}},
        @{n="User"; e={([xml]$_.ToXml()).Event.EventData.Data | Where-Object { $_.Name -eq "SubjectUserName" } | ForEach-Object { $_.'#text' }}} |
    Sort-Object TimeCreated

# Keep only events whose path contains a given word, e.g. the share name "Secret"
foreach ($bod in $Body) {
    if ($bod.File -match "Secret") {
        # Time, full path and user name separated by tabs, one event per line
        $BodyL = $BodyL + $bod.TimeCreated + "`t" + $bod.File + "`t" + $bod.User + "`n"
    }
}

# There can be many entries, so start a new log every day:
# AccessFile-<day>-<month>-<year>.txt
$name = "AccessFile-" + $time.Day + "-" + $time.Month + "-" + $time.Year + ".txt"
$Outfile = '\\server\ServerLogFiles\AccessFileLog\' + $name

# Append the last hour's data to the log file
$BodyL | Out-File $Outfile -Append
```
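The script above builds each line by string concatenation, which renders TimeCreated in PowerShell's default invariant (US-style, month first) date format. If the tab-separated file is meant for a spreadsheet, a small helper with a fixed, sortable date format may be easier to work with. A minimal sketch; the function name and the property names File and User are assumptions, so rename them to whatever your Select-Object produces.

```powershell
# Turn a record with TimeCreated, File and User properties into one tab-separated line
function ConvertTo-TabSeparatedLine {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [psobject] $Record
    )
    process {
        # Fixed, culture-independent timestamp that sorts correctly as text
        $stamp = $Record.TimeCreated.ToString('yyyy-MM-dd HH:mm:ss', [cultureinfo]::InvariantCulture)
        ($stamp, $Record.File, $Record.User) -join "`t"
    }
}

# Hypothetical sample record
$sample = [pscustomobject]@{
    TimeCreated = [datetime]'2020-01-15 10:30:00'
    File        = 'D:\Shares\Secret\report.docx'
    User        = 'ivanov'
}
$sample | ConvertTo-TabSeparatedLine
```

Piping such lines straight into `Out-File $Outfile -Append` writes one line per event without first building one large string.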

Now for the more interesting script.

It writes a log of deleted files.

```powershell
# $time has the same role as in the previous script: look back one hour
$time = (Get-Date) - (New-TimeSpan -Minutes 60)

# $Events: time and record number of every 4660 event, sorted by record number.
# Important: deleting a file creates two records, ID=4663 followed by ID=4660.
$Events = Get-WinEvent -FilterHashtable @{LogName="Security"; ID=4660; StartTime=$time} -ErrorAction SilentlyContinue |
    Select-Object TimeCreated, @{n="Record"; e={$_.RecordId}} |
    Sort-Object Record

# The core search; the principle is described after the listing
$BodyL = ""
$TimeSpan = New-TimeSpan -Seconds 1

foreach ($delEvent in $Events) {
    # The matching 4663 has the record number immediately before the 4660
    $PrevEvent = $delEvent.Record - 1

    # Search only within +/-1 second of the 4660
    $TimeEventStart = $delEvent.TimeCreated - $TimeSpan
    $TimeEventEnd = $delEvent.TimeCreated + $TimeSpan

    $Body = Get-WinEvent -FilterHashtable @{LogName="Security"; ID=4663; StartTime=$TimeEventStart; EndTime=$TimeEventEnd} -ErrorAction SilentlyContinue |
        Where-Object { $_.RecordId -eq $PrevEvent } |
        Where-Object {
            ([xml]$_.ToXml()).Event.EventData.Data |
                Where-Object { $_.Name -eq "ObjectName" } |
                Where-Object { $_.'#text' -notmatch '\.tmp$' } |
                Where-Object { $_.'#text' -notmatch '~lock' } |
                Where-Object { $_.'#text' -notmatch '~\$' }
        } |
        Select-Object TimeCreated,
            @{n="File"; e={([xml]$_.ToXml()).Event.EventData.Data | Where-Object { $_.Name -eq "ObjectName" } | ForEach-Object { $_.'#text' }}},
            @{n="User"; e={([xml]$_.ToXml()).Event.EventData.Data | Where-Object { $_.Name -eq "SubjectUserName" } | ForEach-Object { $_.'#text' }}}

    # $Body is empty when the 4663 was not found or was filtered out
    if ($Body.File -match "Secret") {
        $BodyL = $BodyL + $Body.TimeCreated + "`t" + $Body.File + "`t" + $Body.User + "`n"
    }
}

# Deletions are far rarer than accesses, so one log per month:
# DeletedFiles-<month>-<year>.txt
$name = "DeletedFiles-" + $time.Month + "-" + $time.Year + ".txt"
$Outfile = '\\server\ServerLogFiles\DeletedFilesLog\' + $name

$BodyL | Out-File $Outfile -Append
```

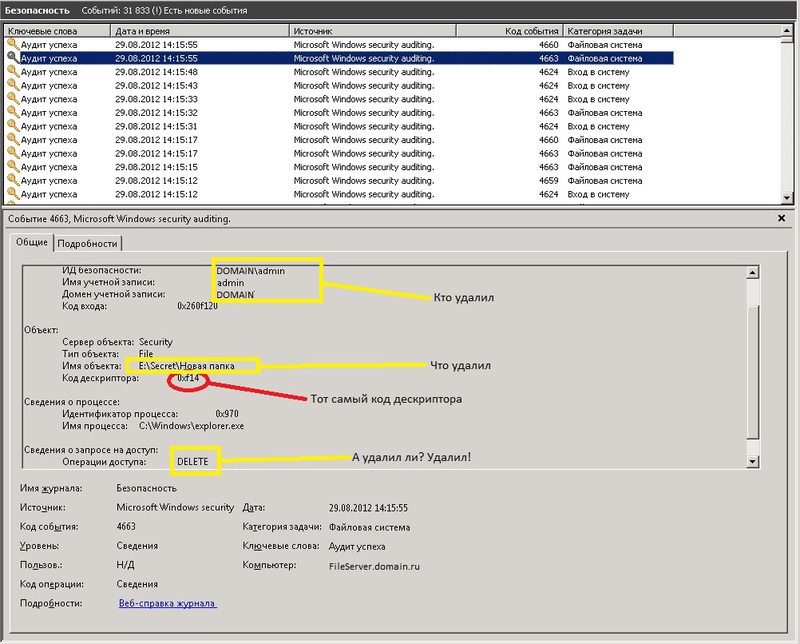

As it turned out, deleting a file and other operations on file handles are all logged under the same event ID, 4663. The "Accesses" field in the message body holds different values: WriteData (or AddFile), DELETE, etc.

Naturally, we are interested in the DELETE operation. But that's not all. The most interesting part is that an ordinary rename produces two 4663 events: the first with the access operation DELETE, the second with WriteData (or AddFile). So if we simply take all 4663 events, we get a lot of misleading information: there is no telling which files were deleted and which were merely renamed.
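To see which operation a given 4663 records without parsing the localized message text, one option is the numeric AccessMask field in the event data. A minimal sketch that decodes only the four bits relevant here (the standard Windows file access rights 0x1, 0x2, 0x4 and 0x10000 for DELETE); the function name is hypothetical. A DELETE bit on its own still cannot tell a deletion from a rename.

```powershell
# Decode the AccessMask field of a 4663 event (a hex string such as "0x10000")
# into names for the file access rights relevant here.
function ConvertFrom-AccessMask {
    param([Parameter(Mandatory)] [string] $AccessMask)

    # Standard Windows file access right bits (file / directory meaning)
    $knownRights = @(
        @{ Bit = 0x1;     Name = 'ReadData (or ListDirectory)' }
        @{ Bit = 0x2;     Name = 'WriteData (or AddFile)' }
        @{ Bit = 0x4;     Name = 'AppendData (or AddSubdirectory)' }
        @{ Bit = 0x10000; Name = 'DELETE' }
    )

    # Convert.ToInt64 with base 16 accepts an optional "0x" prefix
    $mask = [Convert]::ToInt64($AccessMask, 16)
    foreach ($right in $knownRights) {
        if ($mask -band $right.Bit) { $right.Name }
    }
}

ConvertFrom-AccessMask -AccessMask '0x10000'
```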

However, I noticed that when a file is explicitly deleted, another event is created, ID 4660. Its message body contains the user name and a lot of other service information, but no file name. It does, however, contain a handle ID.

The event that precedes it is a 4663, which does contain the file name, the user name and the time, and, unsurprisingly, the operation DELETE. Most importantly, it contains a handle ID that matches the handle ID of the 4660 event above (the one created by an explicit deletion). So to find out exactly which files were deleted, you need to find all 4660 events and, for each of them, the preceding 4663 event that carries the same handle ID.
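To inspect these fields yourself, an event's XML (the output of its ToXml() method, which the scripts already use) can be flattened into a single object. A minimal sketch; the function name and the sample XML are hypothetical, the sample is trimmed to a few fields, and real 4660/4663 records contain many more Data elements. The EventLogRecord objects returned by Get-WinEvent also expose the record number directly as the RecordId property.

```powershell
# Flatten an event's XML into one object: event ID, record number and
# every <Data Name="..."> field of EventData.
function ConvertFrom-EventXml {
    param([Parameter(Mandatory)] [string] $Xml)

    $doc = [xml]$Xml
    $fields = [ordered]@{
        EventId  = [int]$doc.Event.System.EventID
        RecordId = [long]$doc.Event.System.EventRecordID
    }
    foreach ($data in $doc.Event.EventData.Data) {
        # PowerShell exposes the Name attribute and the element text as properties
        $fields[$data.Name] = $data.'#text'
    }
    [pscustomobject]$fields
}

# Hypothetical, heavily trimmed 4660 record
$sampleXml = @'
<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System>
    <EventID>4660</EventID>
    <EventRecordID>1001</EventRecordID>
  </System>
  <EventData>
    <Data Name="SubjectUserName">ivanov</Data>
    <Data Name="HandleId">0x1a4</Data>
  </EventData>
</Event>
'@

ConvertFrom-EventXml -Xml $sampleXml
```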

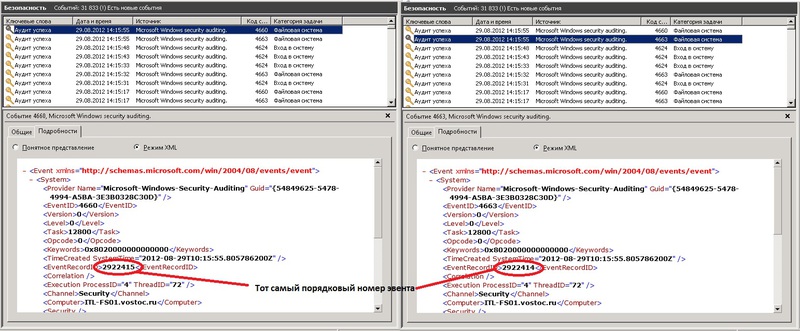

These two events are generated simultaneously when a file is deleted, but they are written sequentially: first 4663, then 4660. Their record numbers differ by one: the 4660 record number is one greater than that of the 4663.
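The pairing rule (the 4663 record immediately before each 4660, with the same handle ID) can also be applied in memory. A minimal sketch of a swapped-in variant of the approach: instead of one Get-WinEvent query per deletion, fetch both event IDs for the period once and join them by record number. The function name and the input property names (EventId, RecordId, HandleId, ObjectName, SubjectUserName, TimeCreated) are assumptions.

```powershell
# Pair each 4660 ("object deleted") with the 4663 recorded just before it.
function Find-DeletedFile {
    param([Parameter(Mandatory)] [object[]] $Events)

    # Index the 4663 events by record number for direct lookups
    $accessByRecord = @{}
    foreach ($accessEvent in ($Events | Where-Object EventId -eq 4663)) {
        $accessByRecord[[long]$accessEvent.RecordId] = $accessEvent
    }

    foreach ($deleted in ($Events | Where-Object EventId -eq 4660)) {
        # The matching 4663 has the record number one lower than the 4660
        $previous = [long]($deleted.RecordId - 1)
        $access = $accessByRecord[$previous]

        # Cross-check the handle ID, which both events carry
        if ($access -and $access.HandleId -eq $deleted.HandleId) {
            [pscustomobject]@{
                TimeCreated = $deleted.TimeCreated
                File        = $access.ObjectName
                User        = $access.SubjectUserName
            }
        }
    }
}

# Hypothetical sample: one deletion and one lone 4663 (e.g. left by a rename)
$sampleEvents = @(
    [pscustomobject]@{ EventId = 4663; RecordId = 1000; HandleId = '0x1a4'; ObjectName = 'D:\Shares\Secret\plan.docx'; SubjectUserName = 'ivanov'; TimeCreated = [datetime]'2020-01-15 10:30:00' }
    [pscustomobject]@{ EventId = 4660; RecordId = 1001; HandleId = '0x1a4'; ObjectName = $null; SubjectUserName = 'ivanov'; TimeCreated = [datetime]'2020-01-15 10:30:00' }
    [pscustomobject]@{ EventId = 4663; RecordId = 1005; HandleId = '0x2b8'; ObjectName = 'D:\Shares\Secret\old.xlsx'; SubjectUserName = 'petrov'; TimeCreated = [datetime]'2020-01-15 10:31:00' }
)
Find-DeletedFile -Events $sampleEvents
```

On a server the input could come from `Get-WinEvent -FilterHashtable @{LogName='Security'; ID=4660,4663; StartTime=$time}`, with each record flattened into such an object. On a busy share, holding an hour of 4663 events in memory may be expensive, which is a trade-off against the per-event queries.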

The search relies on exactly this property.

That is, we take all 4660 events and keep two of their properties: the creation time and the record number.

Then the loop takes the 4660 events one by one and reads these two properties.

Next, $PrevEvent receives the record number of the event we need, the one that holds the information about the deleted file. We also limit the time frame in which to search for the event with that record number: since the two events are generated almost simultaneously, the search is narrowed to 2 seconds, ±1 second.

(Yes, +1 s and -1 s. I can't say exactly why; it was determined experimentally. Without the extra second some events were not found, perhaps because the two events can be timestamped in either order.)

The event with the required record number is searched for within this time window.
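A possible alternative to the ±1 second window: Get-WinEvent can select an event by its record number directly through an XPath filter. A minimal sketch; the helper name is hypothetical, it only builds the query string, and whether this is faster on a large Security log is an assumption worth testing.

```powershell
# Build an XPath query that selects one Security event by ID and record number
function New-RecordIdXPath {
    param(
        [Parameter(Mandatory)] [long] $RecordId,
        [int] $EventId = 4663
    )
    "*[System[(EventID=$EventId) and (EventRecordID=$RecordId)]]"
}

New-RecordIdXPath -RecordId 1000
```

Usage, assuming $PrevEvent holds the record number as in the script: `Get-WinEvent -LogName Security -FilterXPath (New-RecordIdXPath -RecordId $PrevEvent)`.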

Once the event is found, the following filter is applied:

```powershell
| Where-Object {
    ([xml]$_.ToXml()).Event.EventData.Data |
        Where-Object { $_.Name -eq "ObjectName" } |
        Where-Object { $_.'#text' -notmatch '\.tmp$' } |
        Where-Object { $_.'#text' -notmatch '~lock' } |
        Where-Object { $_.'#text' -notmatch '~\$' }
}
```

That is, nothing is recorded for deleted temporary files (*.tmp), document lock files (~lock) or temporary MS Office files (~$*).
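The same filter, rewritten as a small reusable function, might look like this. A minimal sketch; the function name is hypothetical, and the patterns are anchored so that only names ending in .tmp are skipped, rather than any path that merely contains "tmp" (for example a folder named tmpdata).

```powershell
# Return $true for paths that should not be logged: *.tmp files,
# "~lock" lock files and MS Office files whose name starts with "~$".
function Test-IsTemporaryFile {
    param([Parameter(Mandatory)] [string] $Path)

    # Single quotes keep "$" literal; -match is case-insensitive
    $skipPatterns = @(
        '\.tmp$'      # name ends in .tmp
        '~lock'       # lock files such as .~lock.report.odt#
        '(^|\\)~\$'   # file name begins with ~$
    )
    foreach ($pattern in $skipPatterns) {
        if ($Path -match $pattern) { return $true }
    }
    return $false
}

Test-IsTemporaryFile -Path 'D:\Shares\Secret\~$report.docx'
```

In a pipeline it would be used as `Where-Object { -not (Test-IsTemporaryFile -Path $_.File) }`.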

The required fields are extracted from this event in the same way and appended to $BodyL.

Once all events have been processed, $BodyL is written to a text log file.

For the deleted-files log I use one file per month, named with the month number and year, because far fewer files are deleted than accessed.
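The naming scheme (one access log per day, one deleted-files log per month) can be captured in one helper. A minimal sketch; the function name is hypothetical and the names follow the article's format.

```powershell
# Build log file names: AccessFile-<day>-<month>-<year>.txt per day,
# DeletedFiles-<month>-<year>.txt per month.
function Get-AuditLogName {
    param(
        [Parameter(Mandatory)] [ValidateSet('Access', 'Deleted')] [string] $Kind,
        [Parameter(Mandatory)] [datetime] $Date
    )
    switch ($Kind) {
        'Access'  { 'AccessFile-{0}-{1}-{2}.txt' -f $Date.Day, $Date.Month, $Date.Year }
        'Deleted' { 'DeletedFiles-{0}-{1}.txt' -f $Date.Month, $Date.Year }
    }
}

Get-AuditLogName -Kind Deleted -Date (Get-Date)
```

If the files should sort chronologically in Explorer, a zero-padded variant such as `'DeletedFiles-{0:yyyy-MM}.txt' -f $Date` is an option.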

In the end, instead of endlessly digging through the event log in search of the truth, you can open the log file in any spreadsheet editor and filter the data by user or file.

Recommendations

You will have to choose the period over which to search for events. The longer the period, the longer the search takes; it all depends on server performance. On a weak server, start with 10 minutes and see how quickly the script runs. If it takes more than 10 minutes, either increase the period further (that may help) or, conversely, reduce it to 5 minutes.

Once you have settled on the period, add the script to Task Scheduler and have it run every 5, 10 or 60 minutes, matching the period set in the script. I run it every 60 minutes: $time = (Get-Date) - (New-TimeSpan -Minutes 60).
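Registering the script in Task Scheduler can also be done from PowerShell with the ScheduledTasks module. A sketch under assumptions: the script path and task name are hypothetical, the repetition interval must match the period in the script, and on some older Windows versions New-ScheduledTaskTrigger may additionally require -RepetitionDuration.

```powershell
# Hypothetical location of the saved script and task name
$scriptPath = 'C:\Scripts\AccessFileAudit.ps1'
$taskName   = 'Audit - file access log'

# Run the script with Windows PowerShell, ignoring profiles
$action = New-ScheduledTaskAction -Execute 'powershell.exe' `
    -Argument "-NoProfile -ExecutionPolicy Bypass -File `"$scriptPath`""

# Start now and repeat every 60 minutes, matching the period in the script
$trigger = New-ScheduledTaskTrigger -Once -At (Get-Date) `
    -RepetitionInterval (New-TimeSpan -Minutes 60)

# Reading the Security log requires elevation. SYSTEM reaches network shares
# as the computer account, so that account needs write access to the log share.
Register-ScheduledTask -TaskName $taskName -Action $action -Trigger $trigger `
    -User 'NT AUTHORITY\SYSTEM' -RunLevel Highest
```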

PS

I run both scripts against a 100 GB network share that an average of 50 users actively work with every day.

Searching for deleted files over one hour takes 10-15 minutes.

Searching for all accessed files takes 3 to 10 minutes, depending on server load.
