The search architecture in Booking.com

the conference HighLoad++ 2016 Ivan Kruglov told about how the service Booking.com develop your search — one of the Central functions of the Internet booking of hotels.

Hello! I'm Vania, I write in Perl, I can sympathize. [Light laughter from the stage.]

Okay. Seriously, my name is Ivan Kruglov, I'm from Booking.com from the city of Amsterdam. Where I work the last 4 years where the last year and a half I worked on the team that makes our search better.

I want to start a bit from afar. But with the phrase:

Don't be surprised if you don't know the author, my colleague Eduardo Shiota. Why I want to show it? In my opinion, it accurately reflects the development culture Booking.com. Its essence is that we need to provide a better experience, a better experience, to grow and to adapt quickly to the needs of our customers.

There are several components, I want to briefly run through each of them, at the same time to tell you about Booking.com. We first presented at HighLoad++. I think you will be interested.

the

Statistics

Let's start with growth. We grow like this:

Blue graph is the number of units that currently is in the database. Accommodation — hotels, villas, apartments and so on. At the moment there are about 1 million. The orange line is the number of nights booked every day. Through Booking.com daily booked over 1 million nights.

The second is a quick adaptation. To adapt, we need to understand what your client wants. How to do it? We use this method: we make some observation, then we build a hypothesis why this is so, and this hypothesis is testable. If something went wrong, so our observation is wrong, or suffer its interpretation. Go Pixim, try again. Such variants most. If our audit showed "everything is okay", then all is well, you can move on to the next observation.

The mechanism that we use to confirm these hypotheses is a/B testing, or experiments. Experiments allow us with some statistical accuracy to say Yes or no.

Experiments are many, they are different. Experiments are such, when we change something graphical on the website:

A classic example is the color of the buttons, or added or changed conocco, or added a new feature, some unit, a new menu and so on. This is something that is visible to the user.

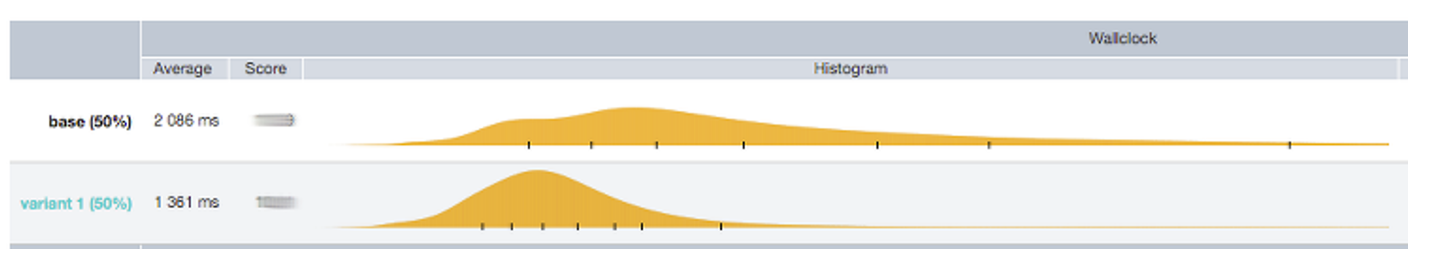

The second type of experiment is when something changed inside. For example, we have a new API or some new service, or are we just upgraded some package. In this case, we want to collect quantitative characteristics. Here on the slide is the distribution of the response time. The top is what happened. At the bottom, that was.

The next and most important point — we want to reiterate that our user from our innovations do not become worse, i.e. his experience is not deteriorated.

There is another type of experiment, which is very extensive and covers everything. Conventionally, it can be represented as follows:

What I want to say here? Clearly, I exaggerate a little, nobody so just code in production is not pushes. But testing is not — it is very minimal, it executes the developer.

We have a very good monitoring, is there a good experiment tool, which allows you not only to run our experiment on a part of traffic, but if something happened, we can quickly understand, to extinguish the fire and move on. Plus we have error budget tolerance to errors, which significantly reduces moral burden on the developer. Set of these factors allows us to dedicate more attention to the business side of the issue than the quality of its implementation.

If you count the number of experiments that are currently running in Booking.com they will get more than a thousand. This number of experiments need to write to deploy. In preparation for the report, I looked at the statistics over the last year. It turned out that on average, we do about 70 deploi a day. If it is put on a standard eight-hour day, it turns out that some part of the website Booking.com changes every 5-10 minutes.

Best experience

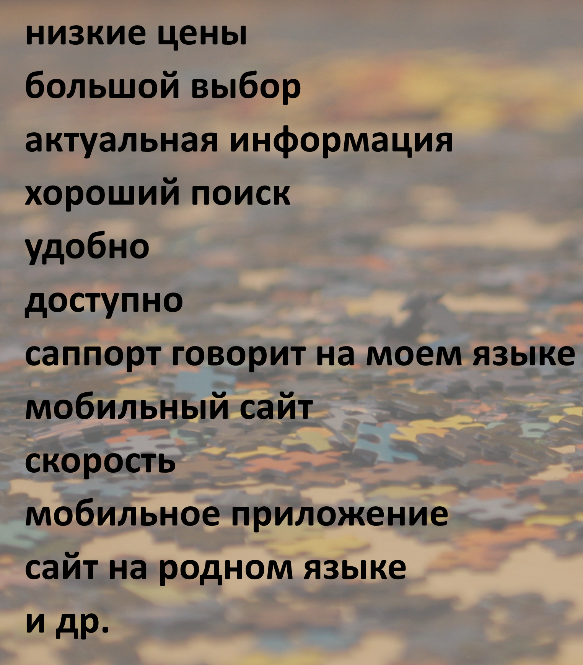

So our user gets a better experience, the entire company — not just the IT Department — needs to collect a large puzzle. In this puzzle there are many, some less obvious, some more obvious, some less important, some importance. For example, the list might look like this:

It is clear that the list is incomplete, just an example. One big obvious point that there needs to be is a good search, which, in turn, must provide two things: it needs to be fast, it needs to give current information. In my report I will tell you about these two things: speed and timeliness of the information.

Talk a little bit about speed. Why speed is important? Why do we make our sites faster?

Someone does to compete with its competitors. Others are doing to customers they are not left, was more the conversion. If we ask Google, it will give us a lot of articles about it will speak. It's all so, Booking.com we can do all this. But we are investing another interesting component.

Let's imagine that we have a conditional search page, which conventionally takes two seconds. Imagine that these two seconds is our threshold, after which our client getting bad. If through our search page is our main search logic takes 90% of the time, then all features in all other experiments only 10% of the time. If we suddenly launched a heavy experiment, that he can push beyond two seconds.

If we did a quick, search began to occupy only 50% of the time, we have freed a lot of time on new features, new experiments. One of the things why we do in Booking.com quickly — we want to see the time in experiments, under features.

the

Search

Then we talk about the search and what was special about it. We will talk about the evolution of search, about the current architecture and the conclusion.

I want to start with an example. Let's imagine that we have a guest who wants to go to Paris. He can go alone, with family, with friends, in the car if he lives nearby (then it is desirable that he was Parking where he's staying), and he can go by public transport (then, it would be nice if the stop was close). And of course, he wants Breakfast. Task Booking.com to help him find a temporary place to stay that satisfies all his requirements.

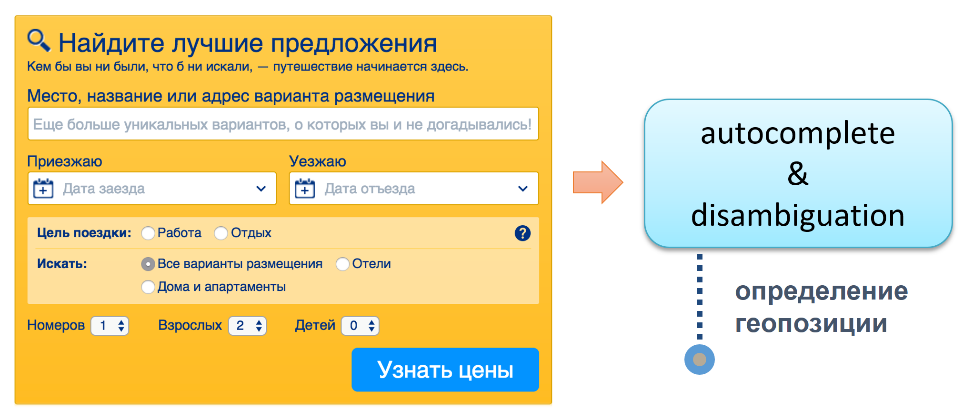

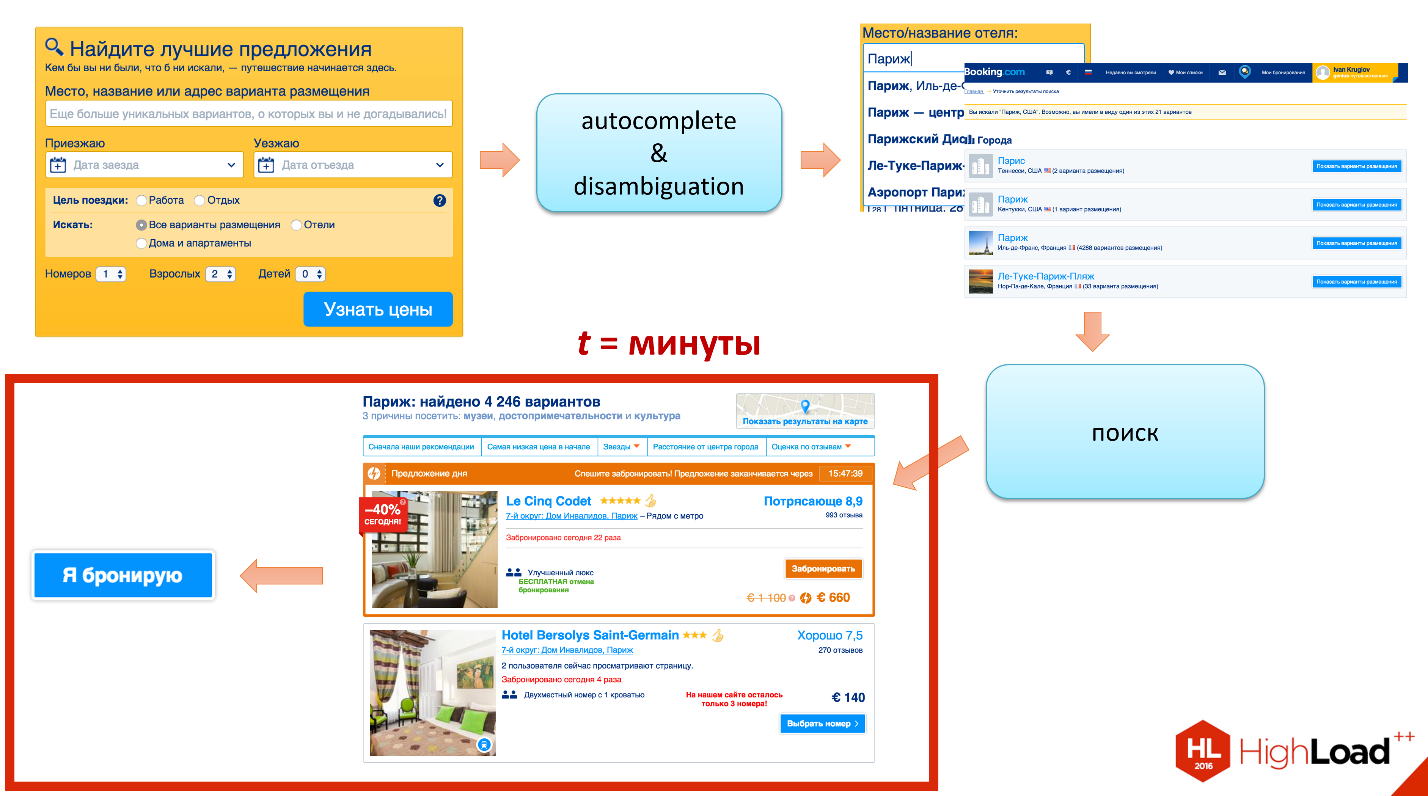

How is the interaction of our guest is with the site? He first visits the homepage. There is a form, I think you all know her. He drives Paris. He immediately begins to interact with the service autocomplete & disambiguation (clarification of ambiguities), the purpose of which is to help us understand what type of location he has in mind.

The fact is that if you just search the word "Paris", it turns out that Paris worldwide about 30 pieces. For example, the village of Paris, Kiginskiy rayon, Republic of Bashkortostan, Russia. This is hardly what he had in mind. There is still a village in Belarus, even two, one island of the Pacific ocean, about 10-15 are in the United States.

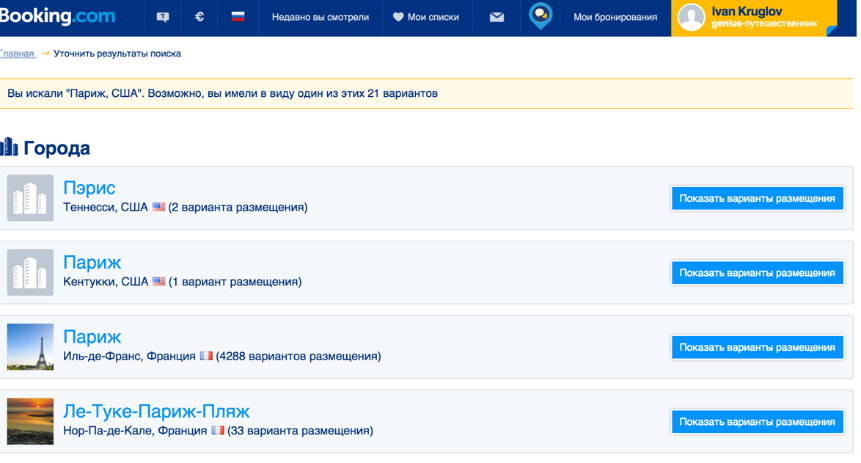

Our user starts to seem like a list where he can choose what this refers to.

If there is no item in the list, then our guest will be redirected to disambiguation, where essentially the same data, only the list a bit more — a couple of dozen elements.

As soon as our guest explained what he wants (I want the one in Paris), formed search query in the search logic that does the following:

the

-

the

- It's picks out the hotel by attributes, for example, filter hotels that have no Parking and no Breakfast. the

- Then it does, if necessary, group fit. If our guest traveling with family, a big family of 6 people, but we have no room that accommodates 6 people, we can try to play: 3+3, 4+2, 5+1. the

- Next is selection of hotels, according to availability. the

- And eventually the ranking.

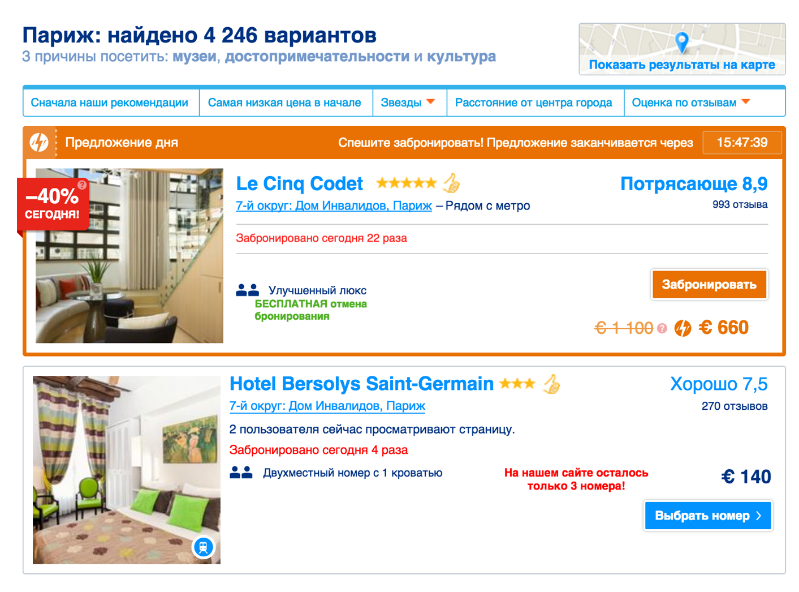

When the search engine worked, formed a search page where our guest read the description and review, looking at prices, choose. In the end, goes to the final stage – booking. Have Booking.com — new reservation, success, all good.

Here I want to make two digressions. First — my further report will be about the square of the search will focus on the search logic of what is happening there inside. Second — I have already mentioned the selection on accessibility. Let me tell you what I mean, to be clear.

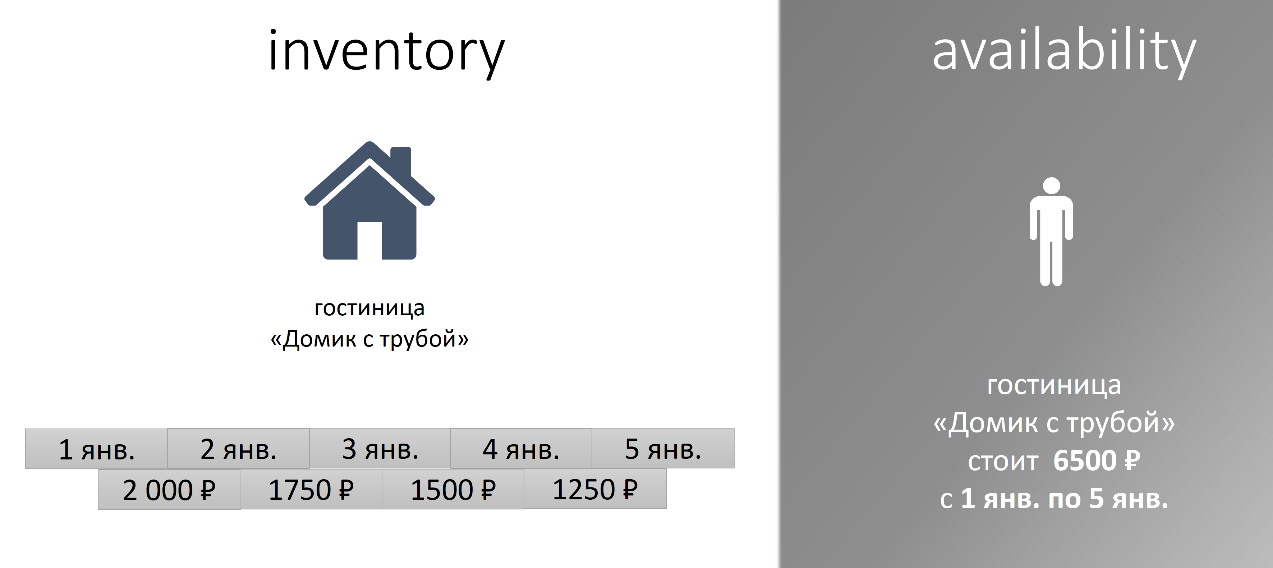

Here it is necessary to define two terms. The first is inventory, presence. The second availability accessibility. What's the difference?

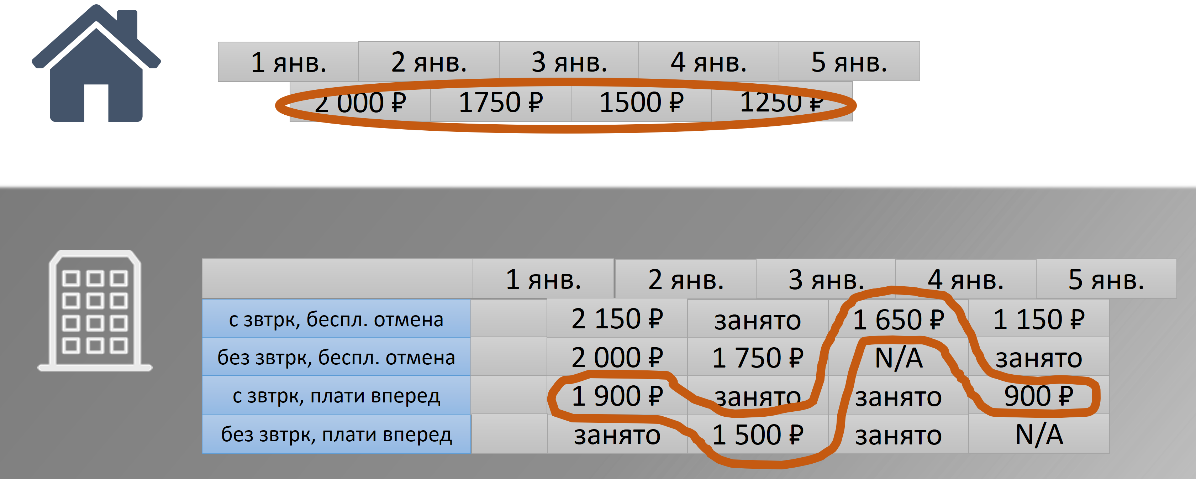

Let's imagine that we have present "a House with a pipe". It has one room, and its owner wants to pass this gift on new year holidays. He has set these prices:

On 1 and 2 January 2000 ₽, from 2 to 3 – 1750 ₽, and so on. This is the data that you're working with our hotel. Hotel, room, date, price.

From the point of view of our guest, it looks a little different. He thinks: "I want to stay in the present "a House with a trumpet" from 1 to 5 January, the price for me will be 6500 ₽". The data is the same, the performance is a little different. The transition between these representations is not always trivial.

In this case, it is simple, we just take them all and summarize. But if we have a big hotel with lots of rooms, many tariffs, many a politician, any number may be employed, some policies may not be available? The result is a non-trivial function of its price.

the

the Evolution of search

With the introduction finished, with a search defined, the terminology I introduced. Went to hardcore.

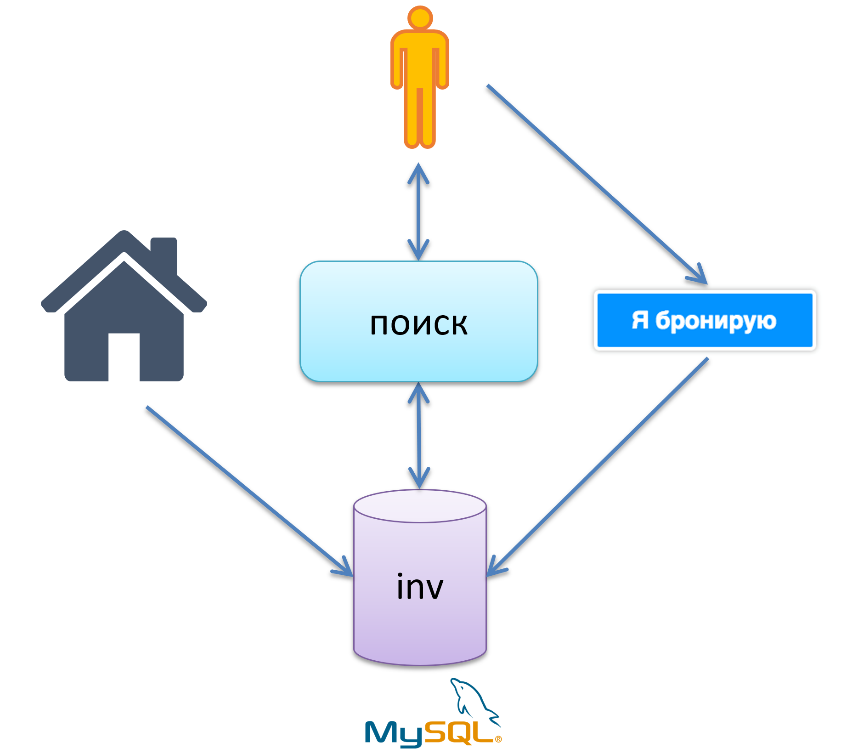

In ancient times, when our base was less than 100 thousand hotels Booking.com used warm LAMP-like stack. LAMP — Linux, Apache, MySQL and P — not PHP, and Perl. Also Booking.com used a monolithic architecture. Business processes were as follows:

Our hotel. There is a base inventory in MySQL, the hotel carries the data: I like that hotel, I have this room, on these days this is my price. Next we have the search logic that pulls data from the inventory calculates availability and gives the search result to our guest. Then the guest goes out for "I book". The logic of this step is to inventory database and makes minus or minus to some recording saying that this number is no more.

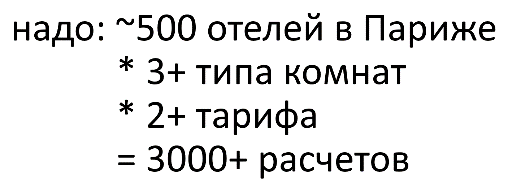

In the center of 2010, when our database was about 150 thousand hotels, the approach is exhausted. The problem was in a serious calculation of availability. This feature was very heavy. To better understand what was the pain, here's an example:

If at that time our database was 500 hotels, each in on average 3 rooms, 2 rate, in order for us to take a sample and sort it by price, we need about 3 million calculations. According to the archives, our stack could issue something like the following:

In one second could count only 1,000 prices to stay in one day and only 90 for stay of 30 days. The more our length of stay, the more options we have to sort out.

By the way, for this reason Booking.com some time in 2008, there was no sorting by price. I personally remember the first time I came to Amsterdam as a student. Was a bit of money, I want to find the cheapest hotel. I couldn't sort by price just for this very reason. Now it's all good.

What to do?

the

- Let's all materialize.

the First thing that came to mind colleagues – let's all secalinum. Not working. It so happened that max cache hit ratio was only 60%.

Let's rewrite of new technology. Decided not to do it. Why? First, monolithic architecture. That is, if you rewrite, then you need to rewrite most of it, it takes a very long time. Secondly, agility will suffer. Companies need to move forward. Let's see what is better? the

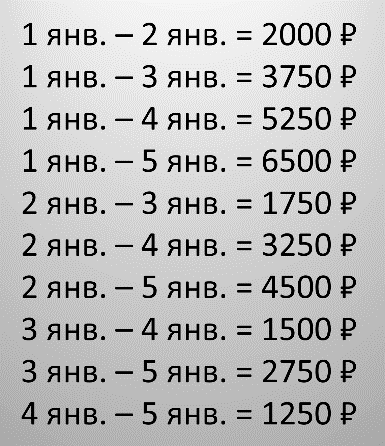

What is materialization? In this context, it is roughly as follows. Returning to the example, we take and just pretransitive all possible combinations of check-in and duration of stay. For example, from 1st to 2nd, 1st to 3rd, 1st to 4th, 1st to 5th, 2nd to 3rd, from 2nd to 4th, etc. Take and consider all in advance.

Get good performance because we have all been calculated in advance, it is only necessary to get this price. This is even the path that matters. We have no fast way when data is written from the cache, for example, and slow when they are not in the cache. The drawback is a huge amount of data. We need to save all these options.

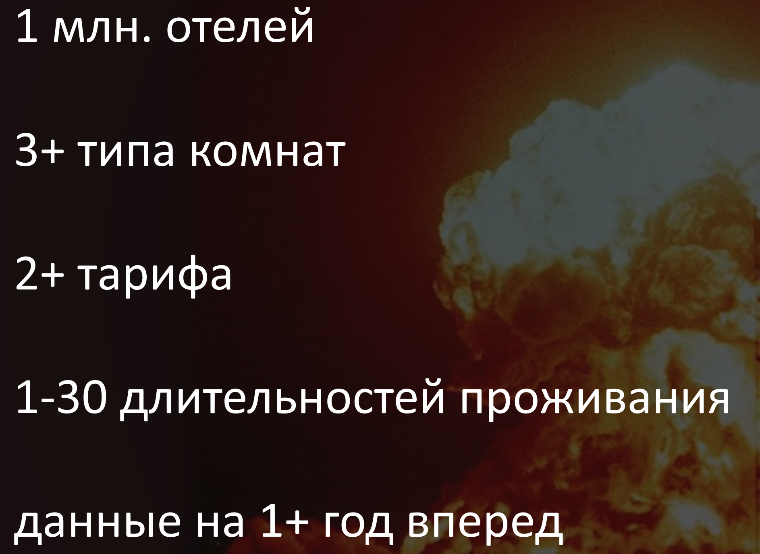

In this embodiment, and stopped. To understand how this huge amount of data, I will show you the current info:

At the moment 1 million hotels, 3 rooms, 2 tariffs, from 1 to 30 days duration of stay. (In Booking.com you can't book a hotel for 2 months, for a maximum of 30 days.) Data is considered for about a year and a half ahead. If you multiply all of these numbers, we get that Booking.com currently contains about 100 billion price.

the

Business processes in the case of materialization

Familiar hotel, a familiar base inventory. There is a new base availability and the process of materialization, which materializes the prices and puts them in a database availability. Search logic uses prekraschenie prices, and reservation logic still changes the data in the original database inventory. It turns out that inventory is our primary database in which lies the whole truth, and availability — some of its cache that always hit ratio is 100%.

With such a scheme there are two challenge. The most important thing: how to make so that not to spoil the user experience? How not to make it so that we first in search logic said that this hotel has such a number is, and then, when he moved to the reservation stage, said that it is not? We need to keep our two databases in a consistent form.

In order to solve this problem, made the following observation. Return you to the chart that I have shown how the interaction of the user with the service.

Look at this part when I search the given sample, the transition to the "I book". Here you can see that the time here carries a user takes minutes: 5-10 minutes until we read a review until we read the description. It turns out, when our guest moved to the stage of reservation, it may happen that the last room he wanted to book, already gone. There is some natural inconsistency of the business model.

Even if we make our two databases absolutely consistent, there will always be a percentage of errors at the stage of "I book" simply because that is the nature. We thought, why do we need to do then the data is consistent? Let's make sure that they were inconsistentname, but the level of error that arises from this inconsistency, is not above the threshold, which is in the power of business processes.

the

Pipeline

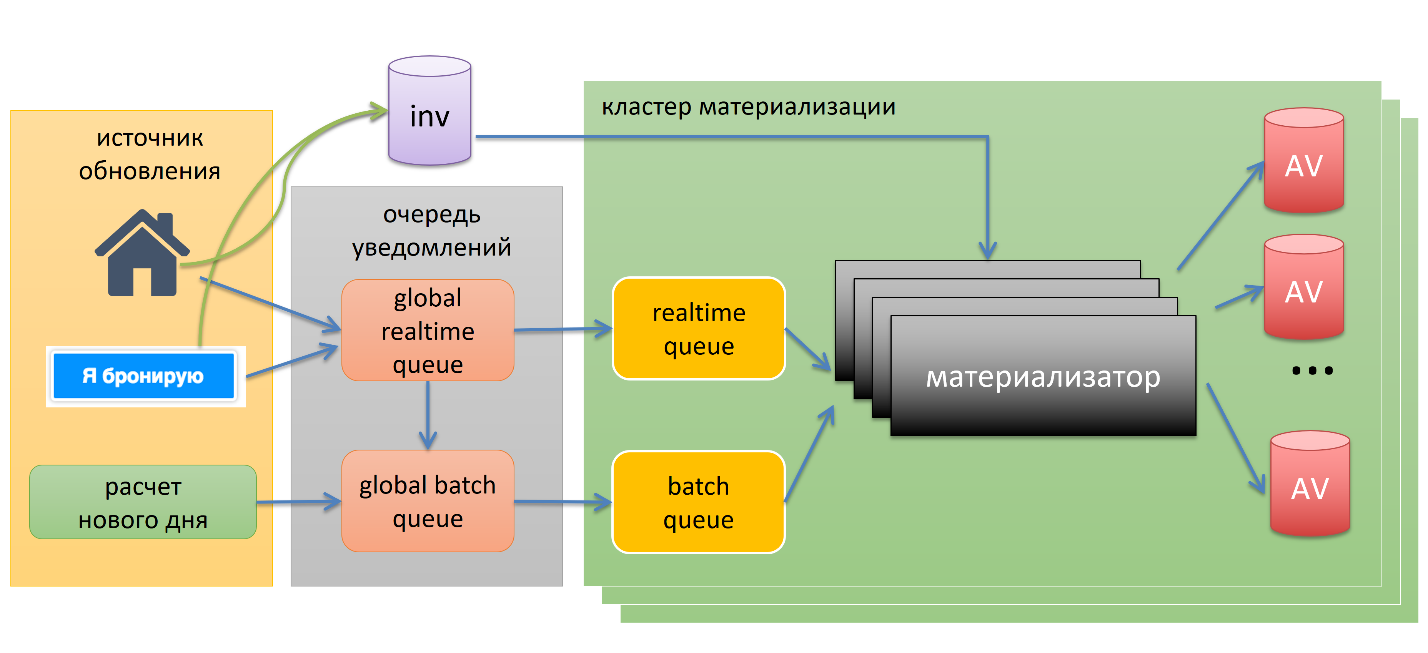

First we have the update sources. Always when they make a change in inventory, they send a notification to one of the global queues. The notification is, for example, "so-and-so hotel, had booked the room" or "so-and-so hotel changed the price".

Two queues, one realtime, the other batch it is backlog. This is done to set some priorities. If we have reservations happened tomorrow, it makes sense to count faster than your booking a year in advance.

Further, the notification flow into one of the clusters of materialization, where there are a lot of materialization. They are specially made over capacity, in the event of a problem we can quickly pounce on our turn, fast to calculate and fast to fold in the base availability. They pull data from the inventory database, detailing all the possible options and put in availability.

There is one interesting point. Little blue button "I'm booking" sends notification not only in the case of a successful reservation, but in the case of an unsuccessful reservation, in case of an error. In case of error we know that potentially there is some inconsistency — let's just in case her count, that is, a self-healing mechanism.

The last element is the calculation of a new day. Roughly speaking, availability is such a moving window with a size in a year and a half. Each day, we need to calculate one year and one day ahead, for example. They always go through a batch queue, for obvious reasons.

the

data Warehousing

A lot of data is dominated by read requests and search queries. Therefore making the reading. Used a cunning clustered primary key index stored in MySQL.

Why clustered? Why on location? Always, when the search is executed, it runs a group of hotels that are close to each other — is the property of locality. It would be nice if those hotels which are geographically close to reality, was close to the database. To our poor MySQL did not have to run on our drive in different directions. The more compact the data are on the disk, the better.

This used the technique of Z-order curve, won't it stop, it's all very simple. Read more at the link.

Doing sharding at check-in. Records many: one record in the inventory can cause thousands of change records in availability. So I had to use SSD hard drives are not holding the load. The load was 4 thousand IOPS.

the

Results

the

-

the

- has an acceleration calculating availability in 50-100 times. We do not need to consider all counted, just grab and pull need.

- has Got the time of the materialization of the norm in less than a minute. In practice, tens of seconds. the

- in order to verify that our initial assumption is valid, we overlaid the system from head to toe with all sorts of metrics and alerts. A key metric was the quality check. Quality check is some external process which takes a sample of availability, samples of inventory, based on the data availability considers and compares. If our data coincide, all is well. If there is data different, or there is no record and there too, we send a notification, some kind of alert. Such alerts always have, but it is critical that they do not go beyond a certain threshold. Materialization solved the problem for a long enough period of time. During this time little changed stack began to use uWSGI + Nginx + Perl + MySQL.

- Location: Paris; the

- Attributes: Parking, Breakfast and so on; the

- Check-in, check-out; the

- part of the "team" (for example, a family of 6 persons).

through clever MySQL clustered index got a quick cold start. All data lie close to each other, you need a few pages to pull from disk. the

In the area 2014 base was about half a million hotels, was the growth of the business, there are new features, there is a search by country and region. For example, in Italy — 100 thousand hotels.

We ran into the same problem, only from a different side. The problem lies in the fact that we have Perl, it is single-threaded. One request be handled by a single worker. Not able to digest it all these selections, sorting and so on.

What to do? Decided to parallelize the whole thing by Map-Reduce scheme. Write your Map-Reduce framework. Switched to service-oriented architecture. And got the following results: we have large queries become faster. Due to this, our request is beating on the smaller, he is sent to the worker, the worker believes their little part, sends data back to the master worker, it is all a matter merit and builds the final result.

Large queries are faster, but the search for peace began to take about 20 seconds. Still not very good, but better than it was. Contracultura is that small requests are slower. The reason for this large overhead IPS in particular, to serialize and transfer data between processes. Perl is single-threaded and serialize it we can only through many processes.

This is about the time when we began to think about changing the architecture, which led us to the architecture we use at the moment.

the

Current architecture

We realized that if we upgraded that we had to tweak, tweak, change the workflow, then in the trailer it can be made to work for some time. But the stack was close to exhaustion of their capabilities. Our camel little tired.

I wanted to abandon outdated approaches. The architecture was based on the approaches that lay the Foundation 5-10 years ago, when it was 50-100 thousand hotels in the database. The approaches that were used then, very poorly, when we have a base of 500k or even a million hotels like at the moment.

Wanted to keep the MapReduce, I wanted to keep the service-oriented architecture. Wanted our service to have quick access to availability and all other data necessary for the query. I wanted a quick database to which you can quickly write. Us for update availability. Wanted to have cheap concurrency.

Looked around. We liked Tarantool, we tried it. It was about a year and a half ago. But decided not to use it for the following reasons.

First of all we are much troubled that, if we go to Tarantool, we will have all the business logic to write in Lua. We don't know her very well, despite the fact that she does well. It's one thing when you have some kind of script, a small stored procedure, and another thing — all business logic in Lua. The second is the code we took it and immediately wrote in Lua, we have worked as quickly as we would like. We have a parallel implementation in Java. Java code run faster.

In the end, we decided that moving from Perl to Java. Java provides multithreading cheap, less than a constant factor. Java is in principle faster, I mean less overhead. Decided that all data is in-memory for quick access. Decided that moving from MySQL to RocksDB.

the

Architecture

In the center of it all is search node, it locally integrated database availability. This means that the database is in the same namespace as your process. This node is in-memory indexes are in-memory database that is persisted.

Nod a lot, they are United in a cluster. Row — shards, through the columns of the the replica. We use a static sharding, each node handles we assign which shard it belongs to. The number of shards such that all our data will fit in memory of nodes. We spread the data with a simple operation "division with remainder", hotel_ id mod N. All replicas are equivalent. We have no master, we are all peer, there is no interaction between nodes.

Now our search query falls into one of the focal points, a lot of them. The coordinator's task is to do scatter-gather, when we take the query and broadcast it to all the shards. Each shard can process their local data, sends the request back to the coordinator, who merit this data and creates the final result.

Inside the shards replica is chosen randomly. If a replica is unavailable, we take and try another. The coordinators constantly pinguet all nodes to understand the current status of our cluster.

In fact, this is the standard search engine same Google or Yandex work about the same. I have here the cherry in the form of availability, we need to update the built-in database, you need to update them in realtime, because availability changes constantly.

For this, we used our existing practices based on Perl and MySQL. Used the same Pipeline with a slight change: instead of writing data directly into the database, we wrote in the persisted queue availability. Why it materialized? We have within the black square of the materialization of all the queues were just notifications, that is, the orange line is the data itself, the meat itself.

How do we update the data availability? Each node, apart from anyone reading this takes place, applies the update to its local condition. We considered data once, which is very expensive, and used them many times. In this queue data is stored in the last hours. If a node behind, she could catch up.

With this scheme we obtained the cluster is eventually consistent. Ultimately, if all nodes do not work at the same speed, we will stop our changes, they all come to the same state.

We are happy with this situation. We here rely on the principle that we used when building materialization: we do not need to do in our base is absolutely consistent. We only need to ensure that this error rate does not go beyond the allowable value.

Here again, there are quality check, plus we use one metric: monitor each milk yield, watch how far she lagged behind the end of the queue. If she lagged behind too far, we take and pull it from the cluster. This is an automated process.

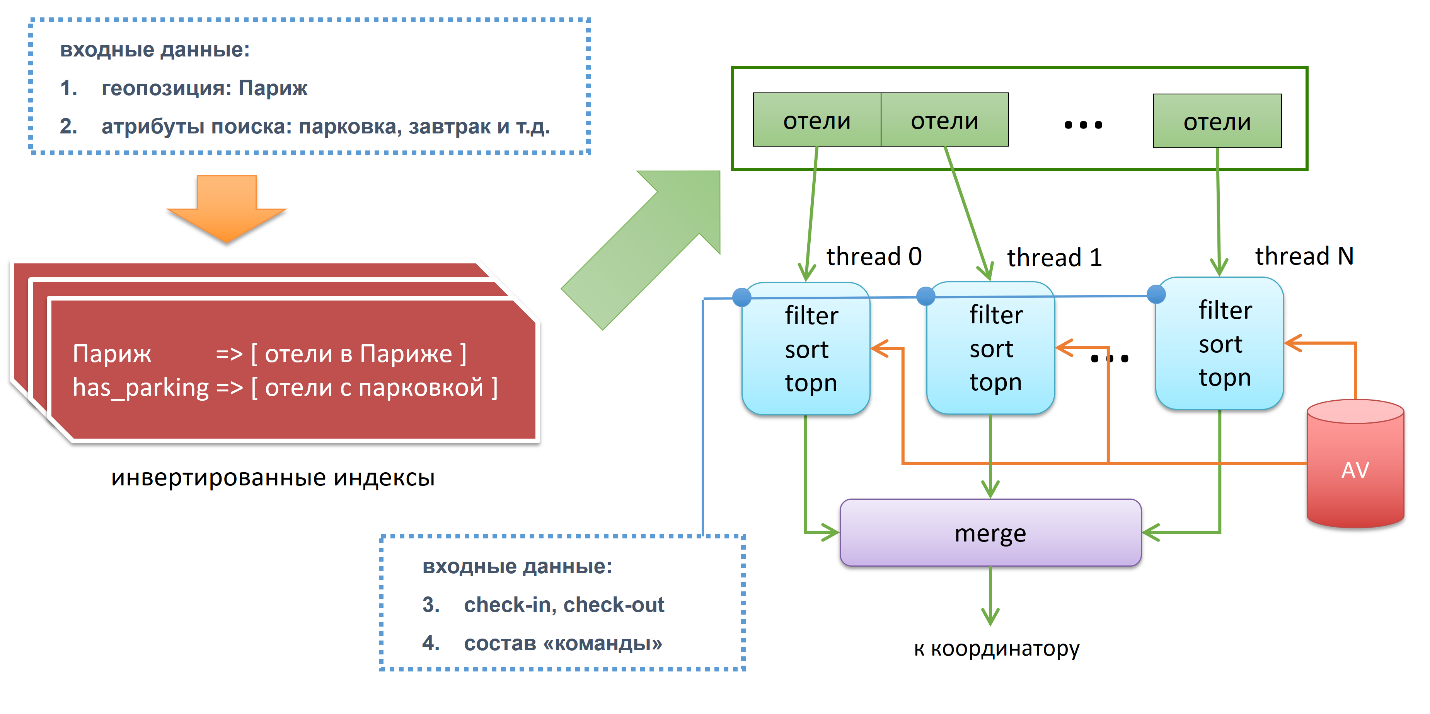

Let's see what's going on inside. We have the input:

-

the

At the entrance is the primary filtering those hotels that meet our criteria based on inverted indexes. We have the index key is the city, value is all the hotels of this city. For example, the Paris hotels located in Paris. There is a second list, for example, those hotels that have Parking. Further, if we cross these two lists — the operation is cheap and fast, we'll get to those hotels which in Paris and Parking.

He received a primary filtered list of hotels, divided it into pieces, each piece fed trade, which makes three steps. First, he makes a secondary filtering based on availability, it verifies this hotel if the room, if Yes then at what price. Also here is group fit. Then we go to the sorting stage. In the end, topn is calculated.

For example, if our search query, said, "I want the first page," on page 15 requests. That is, each of the threads will pull out only top15 and send the data to the master thread, which will make merge. Merge it is done as follows: takes the data from all n-threads, it turns out ntop15 and they make top15. Then sends the data to the coordinator, who in turn is waiting for the results from all shards. From each of the shards he got top15 and again makes the top15. It turns out cascading reducing data. The way it works inside.

I promised to tell you why we stayed on RocksDB. For this you need to answer two sub-questions. Why an embedded database? Why is RocksDB?

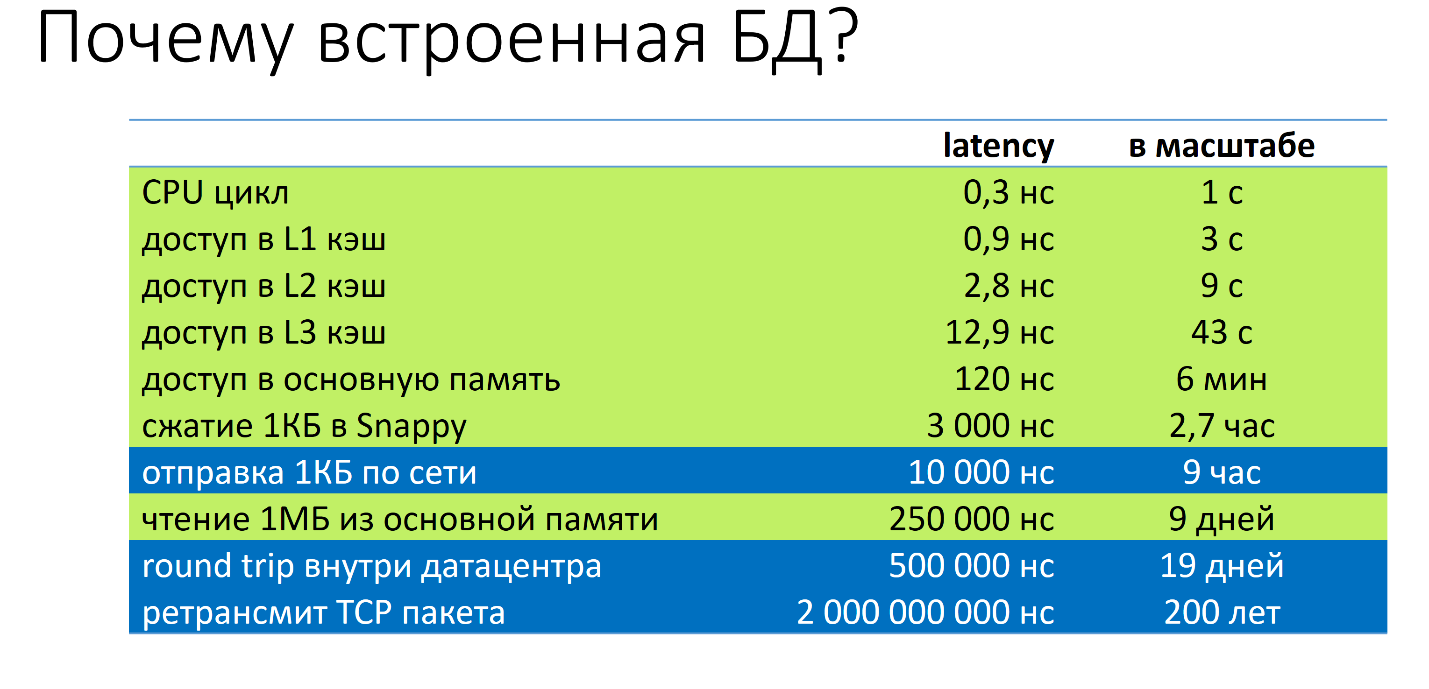

Why an embedded database? Want to show such a sign:

Here are some event from the world of servers and their latency. For better understanding I otmasštabiroval them to clear values. The fastest event is the CPU cycle. It is 0.3 nanosecond. What would have happened if it was 1 second? In this case, we have access to L1 cache is 3 seconds, access the L3 cache 43 seconds, access to main memory — 6 minutes to send one kilobyte of network — 9 hours round trip inside the data center — 19 days, retransmit TCP packet — 200 years.

If we are talking about embedded databases, we are talking about the properties that are closer to the top, which is highlighted in green. If we are talking about databases that go over the network — no matter what is a database, MySQL, Cassandra, whatever we are talking about the bottom line. That's why we chose built-in.

If you view the benchmarks RocksDB, which is on GitHub, if you view the benchmarks Tarantool, which has a built-in database, where they have transaction that is QPS (Queries per second), all measured in millions. This is one of those properties, why is this happening.

Why Is RocksDB? There is a very simple story. We needed a database that would sustain our load. Features any of us particularly want, just key-value, just store, get, delete. We tried different options: MapDB, Tokyo/Kyoto cabinet, leveldb. As tried? We just took put them in field conditions: dataset in pagecache, 80% read + 20% write, read significantly prevails. RocksDB showed us the most stable random read performance with random writes. Randomly recording is our update availability, and rednano reading is our search queries. We stayed right there.

Interesting point: the creators of RocksDB — Facebook. They position it as optimized SSD because of the write and space amplification. In practice, it works great on our regular hard drives. In our case, it holds fifteen hundred records per second.

We have received approximately the following:

Top plate – is the response time of the search service, what before and what after. It is seen that in some cases we have improved the speed of a search query is three orders of magnitude. More importantly, are faster for large queries (particularly the Adriatic coast, in which 30 thousand hotels), and small (Sofia, where all 300 hotels).

Bottom graph I showed you before, only not said that those are the results that we received when we introduced the current architecture. The top is what was the average time is approximately 2 seconds. Bottom graph is what became 1.3 seconds. We've improved the speed of page 700 milliseconds, which we can spend on new features, experiments. It is very good.

the

Conclusion

In conclusion: guys, everyone has their own way. See, try what suits you. The same test RocksDB — I specifically did not post any benchmarks because we took in our war, for our specific task, under our specific workload. We took the test, tried different options, and he just earned. In your case, it may not be suitable, try it, it takes not much time.

Second. Working on this project, I made a number of conclusions. First and foremost, speed is not only about conversion, it's about the fact that no matter how many features can you have on the page, how many experiments you can run on the page.

View for materialization. Modern process or a modern storage system can store a huge amount of information. The same 100 billion price, which I showed you is some measly 800 gigabytes. A maximum of 8 bytes per price. 800 GB intermeddle in the memory of the modern top of the server configurations, not to mention clusters of machines. This is all feasible, it all works. And it works very quickly because everything is counted.

Be sure to look at your business processes. Your business processes can tell you a lot, they can greatly simplify life. In our case, we say that we do not need to maintain data consistency between the two databases because the business process has inherent inconsistency. The main thing — that the level of error that was, did not go beyond a certain threshold.

That's all from me, thank you very much for coming! I hope I said something useful.

the Ivan Kruglov — the search Architecture in Booking.com

Комментарии

Отправить комментарий